This paper describes a set of error types associated with human and machine cognition of sequences of passive observations and observations of responses to stimuli in computer and computer network environments. It forms the foundation of a theory for the limits of deception and counterdeception in attack and defense of computer networks and systems.

As part of the effort to understand the limits of cognition and the issues related to deception and counterdeception in the information arena, this paper examines a model of error types associated with this sort of cognition. The belief in the need for some characterization of error types flows from the progress made in fault tolerant computing when the idea of creating a fault model was first introduced. Initial fault models were based predominantly on stuck-at faults in which memory bits and inputs or outputs of digital logic gates were stuck at either an 'ON' or an 'OFF' state. While these models were not comprehensive, they did cover a significant portion of the space of real errors in digital systems and they permitted systematic analysis of the fault space which could then be mathematically driven through sets of equations associated with a design in order to create test sets, perform automated diagnosis, and analyze designs and implementations for theoretical error conditions that were found in the real world. In a similar manner, this paper proposes a model of error types associated with the cognitive processes undergone by computer software, humans, and organizations, in the hope that it will lead to useful models and perhaps deeper mathematical understanding of the challenges and solutions associated with deception and counterdeception in this arena.

Our previous review of the history of deception and the cognitive issues in deception [1] discussed much of the previous work in understanding issues in human cognition. The wide range of experiments done on perception in the visual, sonic, olfactory, and tactile systems indicates that some set of simple rules can be used to model the cognitive systems underlying these perceptual domains and that specific error types can be induced in these systems by understanding and inducing the misapplication of these rules. For example, the human visual system observes flashes of light associated with photons striking the retina and emits impulses through the optic nerve into the brain. These impulses are processed by neural mechanisms to do things like line detection and motion detection. If the line detection mechanism detects two line segments whose end points are coincident in both eyes (or one if the other is disabled), the cognitive system interprets this as a contact between those two lines in 3-dimensional space. Common optical illusions involve the creation of situations in which observers are constrained in their perspective so as to observe things like two line segments whose end points are coincident but in which the actual mechanical devices are not touching. An object can then be 'passed through', seemingly by magic.

It is the thesis of this paper that a very similar set of processes exists in the human and machine cognition of information such as network traffic and machine state and that, with sufficient understanding of these cognitive systems, a set of identifiable error types can be selectively and reliably induced by exploiting these processes. Similarly, we hope to find methods to seek out the limits of the complexity of creating deceptions in this manner and of countering deceptions through selective observation and analysis of observables.

Initial experiments on deception indicate that errors can be induced [2], and more recent experimental results indicate that these errors can be induced in such a manner as to drive subjects through specific and predictable behavioral sequences [3]. These experiments were undertaken under the assumptions about error types discussed in this paper and thus act as a limited confirmation of the model's validity in terms of its relationship to real-world faults at some level of abstraction.

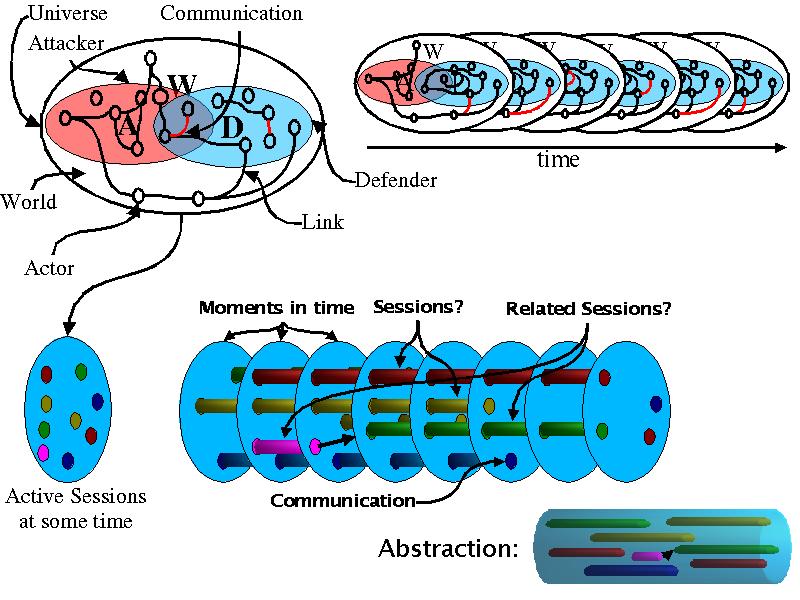

We wish to consider attacks by an organized set of actors (people, other creatures, their technologies, and their group interaction mechanisms) against some other organized set of actors. We will call the group forming the system under attack the 'defender' (D) and the group attacking the defender the 'attacker' (A). By this we mean that some set of processes and capabilities are being applied by the attacker to try to understand something (in the semantic sense) about the defender. Interactions between attacker and defender may operate through the rest of the world (W), and actors and their interactions may be part of both the attacker and the defender. We assume that the cognitive systems of attacker and defender and the operation of the rest of the world can be modeled to an adequate degree of resolution by some sort of state machines:

All possible n dimensional state spaces:

S* := {Su.0, Su.1, Su.2, ...}, all Su.x in S* => Su.x in R^n

; An infinite number of possible state spaces

; n-dimensional real (aleph 1 sized) things (perhaps)

Su.0 := {su.0.0, su.0.1, su.0.2, ...}

Su.1 := {su.1.0, su.1.1, su.1.2, ...}

...

where su.x.y in R^n

In this notation, x may be thought of as time and y as the name of the state variable

Universe:

U := ({Su, t, Fu}, Fu.n: t x Su.n => Su.n+1, t: Fu.n x Su.n => Fu.n+1)

The universe can, presumably, change the totality of states and state transitions with time. For convenience, we will drop the '.n' notation when a statement applies for all n. We also use '->' for implication and '=>' for a mapping. For the purposes of our discussion, we are concerned with attackers, defenders, and others, each of which comprises a subset of the universe:

The Universe:

U: ({Sa.0, Sd.0, Sw.0}, {Sa.1, Sd.1, Sw.1}, ...) in S*

Su := Sw union Sd union Sa

Fu := Fw union Fd union Fa

Interfaces:

Ia in Sa, Id in Sd, Iw in Sw

Iad := Ida := Ia intersect Id

Iaw := Iwa := Ia intersect Iw

Iwd := Idw := Iw intersect Id

Ia intersect Id intersect Iw = {}

Iad intersect Iaw = {}, Iwd intersect Iwa = {}, Ida intersect Idw = {}

Attacker, Defender, World, and Universe

A := ({Ia, Sa, Fa, t} Fa.n: t x Ia.n x Sa.n => {Sa.n+1, Ia.n+1}, t: Ia.n x Sa.n x Fa.n => Fa.n+1)

D := ({Id, Sd, Fd, t} Fd.n: t x Id.n x Sd.n => {Sd.n+1, Id.n+1}, t: Id.n x Sd.n x Fd.n => Fd.n+1)

W := ({Iw, Sw, Fw, t} Fw.n: t x Iw.n x Sw.n => {Sw.n+1, Iw.n+1}, t: Iw.n x Sw.n x Fw.n => Fw.n+1)

for all x in Su.n, x in Id.n and x in Ia.n -> x = x

for all y in Su.n+1, y in Id.n+1 and y in Ia.n+1 -> y = y

for all x in Su.n, x in Id.n and x in Ida.n -> x in Ia.n

and similarly for A, W

We will represent these as:

A.n => A.n+1, D.n => D.n+1, W.n => W.n+1

A sequence of k steps is represented with k in front of the arrow:

A.n k=> A.n+k, D.n k=> D.n+k, W.n k=> W.n+k

which may also be annotated as F^k

Also note that Iad, Iaw, and Iwd directly constrain possible Fa, Fd, Fw

The 'Interface' states (Iw, Ia, and Id) are shared states between state spaces. Thus communication consists of changes in the shared states. Talking is different from touching. In touching, communication is direct via the shared portion of space at the interface between the parties. In talking, communication is indirect in that the shared portion of state that changes at the interface between the talker and the world results in state changes in the world, which result in state changes at the shared states between the world and the listener.

The actors and their interaction technologies are physical sets of objects in a multidimensional (we normally think in terms of 3 of them), unlimited and ever-changing universe with infinite granularity. Thus everything is of size at least Aleph-1 in three spatial dimensions and 't' (time or more generally some 'thing') changes the elements of those spaces as well as their states. While a more elaborate model of the universe (perhaps using super string theory) might be technically more accurate, this will do for our purposes. The topology, membership, and state of the elements of the subspace of the universe comprising the attacker, defender, and world also change over time. The attacker, defender, and world may share some parts of the universe, which we will call their interfaces (I).

The human visual system observes flashes of light that strike the eyeball (i.e., state changes at the interface between the world and the actor). It uses cognitive mechanisms to turn those flashes into sets of semantic entities such as representations of chairs and tables within the context of visualization. Similarly, the attacker uses its cognitive mechanisms to turn observables (i.e., state changes at the interface) into sets of semantic entities such as types of information systems and communications protocols within the context of its attacks. In terms of the state machine description: Iaw.n-1 => Iaw.n AND Sa.n k=> Sa.n+k. But in terms of what we are discussing, we need the additional notion of models.

The 'static case' with respect to U, A, D, and W assumes that U does not change with t (i.e., Su.n = Su.n+1 and Fu.n = Fu.n+1) and that A in U, D in U, and W in U do not change their state spaces (i.e., A.n = A.n+1, D.n = D.n+1, W.n = W.n+1). In this case, A, D, and W can be treated as state machines wherein the total set of states and state transitions for each remains the same and only their state values change. Other static cases may arise, but this one is particularly useful because it represents the most easily analyzed case.

Static case w.r.t. U, A, D, and W:

A := ({Ia, Sa, Fa}, Fa: Ia x Sa => {Sa, Ia})

Ia := (Sa intersect (Sd union Sw))

D := ({Id, Sd, Fd}, Fd: Id x Sd => {Sd, Id})

Id := (Sd intersect (Sa union Sw))

W := ({Iw, Sw, Fw}, Fw: Iw x Sw => {Sw', Iw'})

Iw := (Sw intersect (Sa union Sd))

Within the general framework, we can describe the basics of observation. We start with direct observation. In direct observation, there are shared states between A and D and the values and changes in values of those states are directly available to A and D. In the case of an attacker observing a defender, the process goes like this:

sd.n.m in Ida.n = sa.n.m in Ida.n

To the extent that state changes in D are reflected in state changes in Ida and that those changes are reflected in Ia, we can add additional steps to the process as follows:

Sd.x y=> Ida.x+y z=> Ia.x+y+z

In the case of indirect communication, the world intervenes and the process expands to:

Sd.x y=> Idw.x+y z=> Iw.x+y+z a=> Sw.x+y+z+a b=> Iwa.x+y+z+a+b c=> Sa.x+y+z+a+b+c
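The static case and the two observation chains above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the variable names (`d_secret`, `link_ad`, `wire_d`, `w_buffer`, `wire_a`, `a_direct`, `a_indirect`) and the one-copy-per-tick transition functions are hypothetical and are not part of the model itself. The sketch computes the interfaces by set intersection and then counts how many steps a change in Sd needs to reach Sa by the direct and the indirect route.

```python
# Hypothetical state-variable names; each set plays the role of Sa, Sd, or Sw.
Sa = {"a_direct", "a_indirect", "link_ad", "wire_a"}
Sd = {"d_secret", "link_ad", "wire_d"}
Sw = {"w_buffer", "wire_d", "wire_a"}

# Interfaces as defined for the static case.
Ia = Sa & (Sd | Sw)
Id = Sd & (Sa | Sw)
Iw = Sw & (Sa | Sd)
Iad, Iaw, Iwd = Ia & Id, Ia & Iw, Iw & Id


def F_d(u):
    """Assumed Fd: the defender copies an internal state onto both of its interfaces."""
    return {"link_ad": u["d_secret"], "wire_d": u["d_secret"]}


def F_w(u):
    """Assumed Fw: the world buffers what it receives, then forwards it toward A."""
    return {"w_buffer": u["wire_d"], "wire_a": u["w_buffer"]}


def F_a(u):
    """Assumed Fa: the attacker copies each of its interfaces into internal state."""
    return {"a_direct": u["link_ad"], "a_indirect": u["wire_a"]}


def step(u):
    """One synchronous tick: every F reads the same snapshot u of the universe."""
    nxt = dict(u)
    for F in (F_d, F_w, F_a):
        nxt.update(F(u))
    return nxt


def steps_until(u, var, value, limit=20):
    """Number of ticks until u[var] == value, or None if the limit is reached."""
    for n in range(limit + 1):
        if u[var] == value:
            return n
        u = step(u)
    return None


u0 = {name: 0 for name in Sa | Sd | Sw}
u0["d_secret"] = 7
direct_lag = steps_until(u0, "a_direct", 7)      # Sd => Ida => Sa
indirect_lag = steps_until(u0, "a_indirect", 7)  # Sd => Idw => Sw => Iwa => Sa
```

In this toy universe the indirect route takes more ticks than the direct one, because every intermediate mapping adds at least one step. The specific counts depend entirely on the assumed transition functions.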

In general, for each of the 'items' U, A, D, W, a in A, d in D, and w in W, cognitive systems are mechanisms for making mappings between real situations and models of situations. Each of these items can stay constant or change as a function of t and F. We notate constancy '[]', change '<>', realities '[]r, <>r', models '[]m, <>m', mappings '~', exact matches '=', and inversion '!'.

A model is a mapping from the set of 'real' states, state spaces, functions, and times of interest into a set of 'model' states, state spaces, functions, and times. Models are created for some purpose. The suitability of the model to the purpose and the accuracy with which the 'desired' mapping is met by the 'actual' mapping are functions of the model in use. Thus:

| Symbols | Meaning |
| --- | --- |
| [U]r | The state of the real universe |
| <U>r | Changes in the real universe |
| [U]m | The state of a model of the universe |
| [U]r ~ [U]m | A mapping from [U]r to [U]m |
| [A.n => A.n+1]r | The real constancies of A over t from n to n+1 |
| <A.n => A.n+1>m | A model of changes of A over t from n to n+1 |
| <F.n => F.n+1>r ~ <F.n => F.n+1>m | The mapping from real changes in F from n to n+1 into a model of those changes |

Since models in our case are subsets of A and D, the following are always guaranteed to be true:

[U]r != [U]m , <U>r != <U>m

That is, the reality of the universe is not identical to the model of the universe because the model cannot retain enough states to be precise. As a result, constancies and changes in the real universe do not always get reflected in constancies and changes in the model.

For cases where A and D are smaller than W (certainly the case in most situations), for the same reason as for the case of the whole of U, the following are also true for both A and D:

A: [W]r != [W]m, <W>r != <W>m

D: [W]r != [W]m, <W>r != <W>m

Similarly, because of the nature of uncertainty about knowledge of states of the universe, changes in the reality cannot be perfectly reflected in changes in the model:

for A: [D]r != [D]m, <D>r != <D>m

for D: [A]r != [A]m, <A>r != <A>m

The total number of possible models is limited only by the number of sequential machines possible within A or D, the maximum complexity of F, and t. For general purpose mappings, this is the power set of the number of overall state values, but physics limits state transitions to physically proximate states, so this is only an upper bound. Clearly we cannot explore all possible models (as defenders, our exploration of models is part of the general effort to model A in D), but we can look at what we believe to be a fruitful set of models of situations we observe in the world.

With this addition of modeling we can make a more useful characterization of the processes of direct and indirect observation and experimentation and start to talk about the nature of errors in models as well as in the underlying physics and mathematics of systems with states. We begin with the general characterization of errors.

All cognitive systems are limited. Whether it is a result of finite memory, time, granularity, observables, performance, operational range, design, or other factors, errors are possible in all such systems. At identified cognitive 'levels' [1] of current and anticipated systems, we can identify specific errors and error types. A complete set of 'errors' relative to a model can be constructed of differences between the 'desired' and 'actual' mappings between items and the models of those items, both in terms of their states and state spaces and changes in their states and state spaces. We then write the general set of errors in terms of the 'desired' mappings as:

Errors := for each item i from U, A, D, W, a in A, d in D, w in W

[i]m !~ [i]r

<i>m !~ <i>r

In other words, the complete set of possible errors consists of all cases in which the mapping of constancy and change of states, state spaces, functions, and times in the real system into desired equivalent constancy and change of states, state spaces, functions, and times in the model are not desired mappings.
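As a minimal sketch of this definition, the following Python fragment checks a recorded real trajectory against a model trajectory. It assumes that the 'desired' mapping can be written as a function from a real state to the model state it should produce. The function names, the `desired` classifier, and the sample data are hypothetical illustrations, not part of the formal model.

```python
def state_errors(real, model, desired):
    """Steps n where [i]m !~ [i]r: the model state is not the desired image of the real state."""
    return [n for n, (r, m) in enumerate(zip(real, model)) if desired(r) != m]


def change_errors(real, model, desired):
    """Steps n where <i>m !~ <i>r: a change from n to n+1 appears in only one of reality and model.

    Assumption: a real change only counts if it alters the desired model state.
    """
    errors = []
    for n in range(min(len(real), len(model)) - 1):
        real_changed = desired(real[n]) != desired(real[n + 1])
        model_changed = model[n] != model[n + 1]
        if real_changed != model_changed:
            errors.append(n)
    return errors


# Hypothetical example: the real state is a load level, the model is a coarse label.
def desired(load):
    return "busy" if load >= 5 else "idle"


real = [3, 3, 8, 8, 12]
model = ["idle", "idle", "idle", "busy", "busy"]  # the model notices the change one step late

state_errs = state_errors(real, model, desired)    # [2]
change_errs = change_errors(real, model, desired)  # [1, 2]
```

The late update produces one state error (the stale label at step 2) and two change errors: a missed change between steps 1 and 2, and a made change between steps 2 and 3 that has no counterpart in reality at that time.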

Assume that the attacker seeks to covertly collect observables and fuse these observations into a semantic model of what is taking place in the defender without inducing any signals into (or responses by) the defender. This may be a case of either direct observation through the shared interface (the first expression below) or indirect observation through the world (the second expression):

Iad.n

Idw.n k=> Sw.n+k j=> Iwa.n+k+j

As a notational convenience we may write this as:

Id => Sa

<Ida>r

<Idw>r => <Sw> => <Iwa>r => <Sa>

Observables:

{[Ia.k]r, <Ia.k>r}

({<Sa.k>r, [Sa.k]r}, {<Sa.k+1>r, [Sa.k+1]r}, ...)

({<Sa.k>m, [Sa.k]m}, {<Sa.k+1>m, [Sa.k+1]m}, ...)

{([sa.i.x]m, [sa.j.x]m, [sa.k.x]m) ([sa.i.y]m, [sa.j.y]m, [sa.k.y]m) ...}

({<X>r, [X]r} !~ {<X>m, [X]m}) < ({<X>r, [X]r} ~ {<X>m, [X]m})

In other words, we assert implicitly in the use of this model that a situation in which there is a mapping from reality to the model is never worse for the modeller than a situation in which there is not a mapping from reality to the model. We cannot, at this point, prove any such thing. However, assuming we are talking about 'desired' mappings, our definition of an error (from above) is:

[i]m !~ [i]r OR <i>m !~ <i>r

thus, there are attacker errors whenever:

[Sd.k]r !~ [Sa.k]m OR <Sd.k>r !~ <Sa.k>m

By the nature of the process described above, the time delay associated with transmission of information from D to A guarantees that there is a time lag between some element(s) <Sd>r and <Sa>m unless D subset A or A is so good at modeling D that the model always correctly anticipates the next observable. But in this case, no observation is necessary because A always accurately predicts changes and state associated with its model of D. There are cases in which real attacks have models that ignore observables from D; however, these rarely result in success in any real sense for attackers.

The processes above provide for several different opportunities for errors. Specifically, for Ida:

[Id]r = [Ia]r AND <Id>r = <Ia>r

Thus the only opportunities for errors are in:

[Id.k]r j=> [Sa.k+j]m AND <Id.k>r j=> <Sa.k+j>m

Possible error sources include time-related errors (latency), timing-related errors (jitter and misordering), inaccurate mappings, made or missed constancies and changes, and modeling fidelity errors. Assuming that the model makes sessions, relations, and content, errors also include made and missed sessions, made and missed associations, and made and missed content. All of these can be modeled in terms of constancy and changes in the transforms (F^j) relative to the desired model. For example, [Id.k]r j=> [Sa.k+j]m is the same as:

(Id.k, Sd.k, Id.k+1, Sd.k+1, Fd.k: Id.k x Sd.k x Fd.k => {Sd.k+1, Id.k+1, Fd.k+1})

(Ia.k, Sa.k, Ia.k+1, Sa.k+1, Fa.k: Ia.k x Sa.k x Fa.k => {Sa.k+1, Ia.k+1, Fa.k+1})

(Iw.k, Sw.k, Iw.k+1, Sw.k+1, Fw.k: Iw.k x Sw.k x Fw.k => {Sw.k+1, Iw.k+1, Fw.k+1})

for all x in Su.k, Id.k.x in Ida.k.x -> Ia.k.x

for all y in Su.k+1, Id.k+1.y in Ida.k+1.y -> Ia.k+1.y

for all x in Su.k, Id.k.x in Idw.k.x -> Iw.k.x

for all y in Su.k+1, Id.k+1.y in Idw.k+1.y -> Iw.k+1.y

for all x in Su.k, Iw.k.x in Iwa.k.x -> Ia.k.x

for all y in Su.k+1, Iw.k+1.y in Iwa.k+1.y -> Ia.k+1.y

(Id.k+1, Sd.k+1, Id.k+2, Sd.k+2, Fd.k+1: Id.k+1 x Sd.k+1 x Fd.k+1 => {Sd.k+2, Id.k+2, Fd.k+2})

(Ia.k+1, Sa.k+1, Ia.k+2, Sa.k+2, Fa.k+1: Ia.k+1 x Sa.k+1 x Fa.k+1 => {Sa.k+2, Ia.k+2, Fa.k+2})

(Iw.k+1, Sw.k+1, Iw.k+2, Sw.k+2, Fw.k+1: Iw.k+1 x Sw.k+1 x Fw.k+1 => {Sw.k+2, Iw.k+2, Fw.k+2})

for all x in Su.k+1, Id.k+1.x in Ida.k+1.x -> Ia.k+1.x

for all y in Su.k+2, Id.k+2.y in Ida.k+2.y -> Ia.k+2.y

for all x in Su.k+1, Id.k+1.x in Idw.k+1.x -> Iw.k+1.x

for all y in Su.k+2, Id.k+2.y in Idw.k+2.y -> Iw.k+2.y

for all x in Su.k+1, Iw.k+1.x in Iwa.k+1.x -> Ia.k+1.x

for all y in Su.k+2, Iw.k+2.y in Iwa.k+2.y -> Ia.k+2.y

...

(Id.k+j-1, Sd.k+j-1, Id.k+j, Sd.k+j, Fd.k+j-1: Id.k+j-1 x Sd.k+j-1 x Fd.k+j-1 => {Sd.k+j, Id.k+j, Fd.k+j})

(Ia.k+j-1, Sa.k+j-1, Ia.k+j, Sa.k+j, Fa.k+j-1: Ia.k+j-1 x Sa.k+j-1 x Fa.k+j-1 => {Sa.k+j, Ia.k+j, Fa.k+j})

(Iw.k+j-1, Sw.k+j-1, Iw.k+j, Sw.k+j, Fw.k+j-1: Iw.k+j-1 x Sw.k+j-1 x Fw.k+j-1 => {Sw.k+j, Iw.k+j, Fw.k+j})

for all x in Su.k+j-1, Id.k+j-1.x in Ida.k+j-1.x -> Ia.k+j-1.x

for all y in Su.k+j, Id.k+j.y in Ida.k+j.y -> Ia.k+j.y

for all x in Su.k+j-1, Id.k+j-1.x in Idw.k+j-1.x -> Iw.k+j-1.x

for all y in Su.k+j, Id.k+j.y in Idw.k+j.y -> Iw.k+j.y

for all x in Su.k+j-1, Iw.k+j-1.x in Iwa.k+j-1.x -> Ia.k+j-1.x

for all y in Su.k+j, Iw.k+j.y in Iwa.k+j.y -> Ia.k+j.y

Other than the requirements of equality of identical elements of the universe, all mappings (f in F) have the potential to induce errors. In the simplest case, even a function for duplication of a state can fail to produce equality because of uncertainty. Far more complex sorts of errors can occur. Of course the problem with an 'error' equation is that whether or not a transform is an error depends on the context in which it is applied. If the transform done by F is 'desired' by the party depending on it, it is not in error. Otherwise it is. Thus the same transform is an error or not an error depending on the context it is applied to. In the case of our model of passive observation, we have specifics in terms of errors being any mapping into a model that is not reflective of the meaningful states and state changes of interest to the observer.

Sequences of errors in F* may compound those errors. In addition, even if F* does not include errors of identity, the part of the transform from reality to models (~) represents portions of F* that might not meet desired F*. Furthermore, assuming that A builds a model of Sd, D can induce specific deceptions into Id that are not differentiable from the internally meaningful content within D. In other words, as long as Sd != Id, D can induce 'deception' states into Id via Sd=>Id that are indistinguishable by A from 'meaningful' states that result in Sd=>Id.
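The last point can be illustrated with a small Python sketch. It assumes that Sd => Id is a projection that discards part of the defender's internal state. The defender states, the banner strings, and the helper names are hypothetical. Whenever two internal states project onto the same interface value, an attacker who sees only Id cannot tell which one produced it.

```python
from collections import defaultdict

# Hypothetical defender states: (internal purpose, value emitted at the interface).
Sd_states = [
    ("real_mail_server", "220 mail ready"),
    ("decoy_mail_server", "220 mail ready"),
    ("real_web_server", "HTTP/1.1 200 OK"),
]


def emit(sd_state):
    """Assumed Sd => Id mapping: only the emitted value reaches the interface."""
    _purpose, banner = sd_state
    return banner


def preimages(states, project):
    """Group internal states by the interface value an observer would see."""
    inverse = defaultdict(set)
    for state in states:
        inverse[project(state)].add(state)
    return dict(inverse)


candidates = preimages(Sd_states, emit)

# Interface values that more than one internal state can produce.
ambiguous = {obs for obs, sources in candidates.items() if len(sources) > 1}
```

Here `ambiguous` contains the mail banner, because a meaningful state and a deception state both emit it. The web banner has a single preimage, so in this toy example it carries no such ambiguity.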

For Idw => Sw => Iwa => Sa errors are possible through:

[Idw.i]r j=> [Sw.i+j]r k=> [Iwa.i+j+k]r l=> [Sa.i+j+k+l]m AND <Idw.i>r j=> <Sw.i+j>r k=> <Iwa.i+j+k>r l=> <Sa.i+j+k+l>m

We still have the same sorts of errors except that these errors are made possible at more steps along the way.

The attacker wishing to gain increasing semantic value from a sequence of observations might combine observables into increasingly complex models of activities. This may also be the source of errors of the same sorts. Placements associated with attacker sensors (i.e., the size of Id relative to Sd) and capabilities of sensors, communications, and modeling (i.e., the extent to which F accurately maps predecessor to successor) may limit the accuracy of these processes and models.

A contemporary example of this sort of attack mechanism is the typical network analyzer that takes a series of network packets from one observation point and fuses them together into a series of sessions associated with source and destination IP addresses and TCP, UDP, and other ports and protocols. Some of these analyzers are able to further process this data into IP 'sessions' such as the sequences that are used to fetch electronic mail from servers (e.g., the pop3 protocol). They then provide the means for the human user to request any particular pop3 session and produce a colorized report that shows this specific exchange exclusive of other packets with the communicating parties differentiated by color to facilitate ease of comprehension in the human cognitive system.

Possible failure modes in this process include the limited set of observables associated with the single observation point (i.e., Ida != Sd), failures in presentation of observed data to the computer observing the interface (i.e., F fails to achieve identity), failures in presenting that data to the user (i.e., F fails to map actual data into meaningful flashes of light for the user, also known as inadequate (~)), and failures of the same sort in the human system observing the computer's output. If we add the analytical components to this analysis, we gain failures in sequence reconstruction, reconstruction when no real sequences exist, failure to notice or resolve ambiguities, identification of ambiguities when there are none, and all of the various errors associated with making and missing content so richly available to humans and the cognitive systems they create.
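The fusion step and the first failure mode (a single observation point that does not see everything) can be sketched as follows. This is an illustrative toy, not how any particular analyzer works: the `Packet` fields, the `link` label, and the sample traffic are assumptions made for the example.

```python
from collections import defaultdict
from typing import NamedTuple


class Packet(NamedTuple):
    src: str
    sport: int
    dst: str
    dport: int
    proto: str
    link: str      # hypothetical label for the network segment the packet crossed
    payload: str


def session_key(p):
    """Direction-independent key so both halves of an exchange fuse into one session."""
    endpoints = sorted([(p.src, p.sport), (p.dst, p.dport)])
    return (p.proto,) + tuple(endpoints)


def fuse(packets):
    """Fuse packets into sessions keyed by protocol and endpoint pair."""
    sessions = defaultdict(list)
    for p in packets:
        sessions[session_key(p)].append(p.payload)
    return dict(sessions)


traffic = [
    Packet("10.0.0.5", 40001, "10.0.0.9", 110, "tcp", "lan1", "USER alice"),
    Packet("10.0.0.9", 110, "10.0.0.5", 40001, "tcp", "lan1", "+OK"),
    Packet("10.0.0.7", 40002, "10.0.0.9", 25, "tcp", "lan2", "HELO example"),
]

real_sessions = fuse(traffic)
# The sensor sits on lan1 only, so its observables are a strict subset of the traffic.
observed_sessions = fuse(p for p in traffic if p.link == "lan1")
missed_sessions = set(real_sessions) - set(observed_sessions)
```

With the sensor on one segment, the session on the other segment never enters the model at all. This is a missed session caused purely by sensor placement, before any analytical error has a chance to occur.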

Current packet analyzers typically go this far, but the typical overall attacker goes much further. The human may fuse sessions together into sets of interrelated protocols, analyze details of packet content for system identification, analyze timings and other data for distance, system characteristics, load levels, and so forth. The attacker might view packet content over time to seek to understand the participants and content associated with communications, try to fuse data from different sensors together to get a more complete picture, or try to fulfill other modeling objectives. It is noteworthy that all current tools make the implicit assumption that they operate in the static case with respect to A, D, W, and U described above. In other words, the tools ignore the possibility of changes in these items.

While these additional steps are not typically automated today, they are potentially automatable, and indications of advancing attack technology strongly support the notion that they will be automated some day. Whether the process is undertaken by a packet analyzer, the human analyst, or an organizational process, from our view, the attacker cognitive system is at work.

The sequence of observables can be characterized as the projection of the flow of cognitive processes in the defender into the attacker's observables. Whatever is to be modeled by the attacker can be thought of as a characterization of the cognitive processes of the defender that induced those observables. These two cognitive systems may not be commensurable in that the attacker's model may involve things that are very different from the things involved in the defender's cognitive system. For example, the defender may be emitting random bit sequences because of a hardware error and the attacker may be modeling these as a new encrypted protocol that must be broken through cryptanalysis. This problem of commensurability comes into play when the attacker or defender tries to model the cognitive processes of the other in an effort to 'out think' them. The granularity, depth, accuracy, timeliness, and available resources for making the attacker's model result from the capabilities and intent of the attacker. The efficacy of the model may be affected by the defender's success at controlling the attacker's observables.

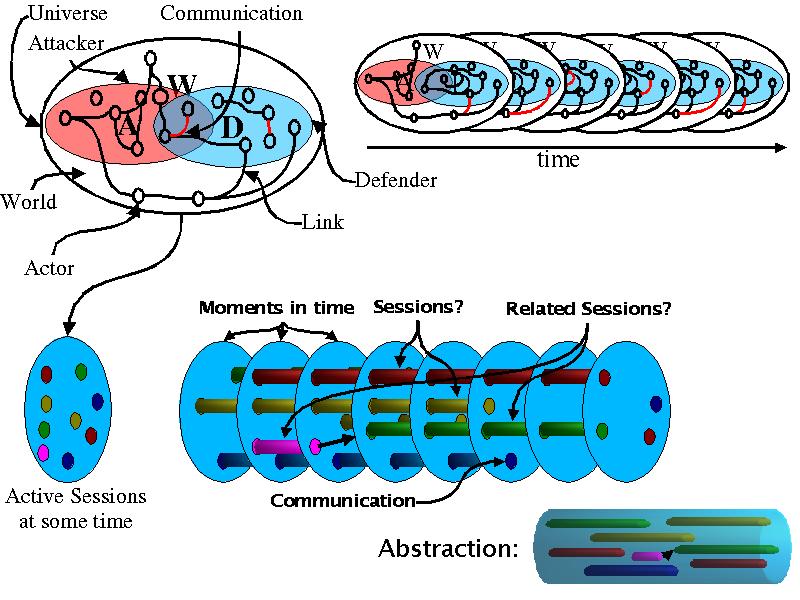

Now consider the case where an attacker is willing and able to risk increased exposure by inducing signals into the defender. This may have the effect of providing additional state information or altering states in the defender. Feedback from this process may return to the attacker through their observables. This situation is depicted in the accompanying graphic.

By taking actions and observing the results of those actions, the attacker may effect state changes in the defender and enhance their model of it. This process can be thought of as an active search of the defender's state space and an attempt to control the state of the defender, also resulting in modification of the attacker's state space. Returning to the visual system, learning takes place in the brain in the form of rewiring neural connections and changing electrical signal strengths, neural activity levels, frequencies of neuron firings, changes in neurotransmitter levels, and so forth. Similarly, in our model, the attacker, defender and world states and topology may change. The state space and state of the universe may change, t x Su => {S'u, S'u'}, and attacker, defender, and world subspaces may change, (Sa x Sd x Sw x t) => {S'a, S'd, S'w}. Relativistic effects will be ignored for now and it will be assumed that the propagation of changes in the universe operates at a rate faster than is of interest to the cognitive systems under consideration.

As in the pure observational case, modeling limitations apply. The total state space of the defender cannot be explored and retained by the attacker unless the attacker has enough available state storage in addition to its own state machine operational needs to store all states and transitions associated with its model of the defender. The model may not be reflective of the true nature of the defender, or may not be adequate to fulfil the objectives of the attacker. The resolution cannot be adequate to create a perfect model except under very limited and unrealistic circumstances. All of the issues that apply to the observation only case apply to the active case as well.

In searching the space, the attacker induces signals that affect the defender. If these are among the set of signals that can be observed by the defender's cognitive system in its state at the time of signal arrival, we call them observables. As the defender's state space is more thoroughly explored by the attacker, some signals and state sequences may be recognized by the defender's cognitive system as requiring specific action. This may trigger alterations in the defender's state, the reachable portions of the defender's state space, the defender's recognition system, and the observables available to the attacker and the defender. There may also be arbitrary delays between the occurrence of an observable and cognitively triggered actions associated with it.
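A toy version of this interaction is sketched below. It assumes a hypothetical defender that answers probes truthfully until it has seen a fixed number of distinct probes, after which its recognition mechanism switches every answer to "open". The class, the threshold rule, and the port numbers are illustrative assumptions only. The point is that the act of searching changes the observables the attacker gets back.

```python
class Defender:
    """Hypothetical defender that answers probes truthfully until it recognizes a scan."""

    def __init__(self, open_ports, threshold):
        self.open_ports = set(open_ports)
        self.threshold = threshold  # distinct probes tolerated before reacting
        self.seen = set()
        self.alerted = False

    def respond(self, port):
        """Return the observable the attacker receives for a probe of this port."""
        self.seen.add(port)
        if len(self.seen) >= self.threshold:
            self.alerted = True  # a recognized sequence triggers a state change
        if self.alerted:
            return "open"        # deceptive answer, regardless of the real state
        return "open" if port in self.open_ports else "closed"


def active_search(defender, ports):
    """Attacker's model of the defender: the set of ports reported as open."""
    return {port for port in ports if defender.respond(port) == "open"}


defender = Defender(open_ports={22, 80}, threshold=4)
attacker_model = active_search(defender, [21, 22, 23, 25, 80, 110])

made = attacker_model - defender.open_ports    # modeled as open, really closed
missed = defender.open_ports - attacker_model  # really open, not in the model
```

In this run the first three answers are truthful, and every answer from the fourth probe onward is deceptive, so the attacker's model contains made entries that do not exist in the real defender. A real defender could just as well react with delays, silence, or changed topology. The fixed threshold is only the simplest rule that shows the effect.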

The attacker and defender may also have overlapping elements and those elements may change over time. For example, communications may stop or start on different links, the attacker may take partial control of a defender's computer, or there may be insiders planted in the defender's organization, elicitation, deflection, and so forth.

The topology of the attacker, defender, and world may change. New computers might be bought and deployed, positions changed, and systems removed; employees might be hired, fired, or changed; children might be born; people and parts might die or fail; and so forth.

These notions are different from the typical notions of state machine theory in that we are associating semantic differences between portions of the state space. This may be modeled as the division of the state space into subspaces in which different transition sets are applied, but strictly speaking, the division of the state space in such a manner is only useful to the extent that it grants us convenience for our characterization and analysis of the state machines.

As a reminder, the attacker and defender we are speaking of may be the combination of humans, other living creatures, and technologies, so the notion of semantic differences is sensible. Notions like changes in observables clearly apply to these systems because, as an example, the physiology of humans is such that detection thresholds for observables change based on changes in chemical compositions at sensor sites and neurotransmitters at neurons. Furthermore, the mechanisms we are discussing are not necessarily finite state machines. They involve continuous functions in complex and imperfect feedback systems. The brain literally rewires itself, and we are not anthropomorphizing when we bring up things like intent.

In the same manner as the attacker may affect the defender, the defender may affect the attacker through its actions. Presumably, it is the intent of the defender's cognitive system to optimize its overall function. To the extent that this is at odds with the attacker's objectives, these alterations to defender state may have detrimental effects on the attacker's ability to carry out its objectives. It is also possible that the defender's objectives are not at odds with the attacker's and that these state alterations will work to the attacker's benefit.

The result is, potentially, a battle between two cognitive systems: that of the attacker and that of the defender. The nominal objective of the attacker is to gain an adequately accurate model of the defender to induce desired states. The objective of the defender is to prevent the attacks and undesired state changes in itself and, assuming that vulnerabilities exist in its state machines and that the attacker may eventually encounter and exploit them, to affect the cognitive system of the attacker so as to control the cost of such success. We may refer to the purely observational process as a 'passive' attack and the process involving the induction of signals by the attacker into the defender as an 'active' attack.

All cognitive systems are limited. Whether it is a result of finite memory, time, granularity, observables, performance, operational range, design, or other factors, errors are possible in all such systems. At the cognition levels of current and anticipated systems, we can identify specific errors and error types, regardless of the mechanisms involved. The complete set of 'errors' can therefore be considered to consist of differences between the desired and actual mappings between items and the models of those items, both in terms of their states and state spaces and changes in their states and state spaces. We then write the general set of errors as:

Errors := for each item i from U, A, D, W, a in A, d in D, w in W

[i]m !~ [i]r

<i>m !~ <i>r

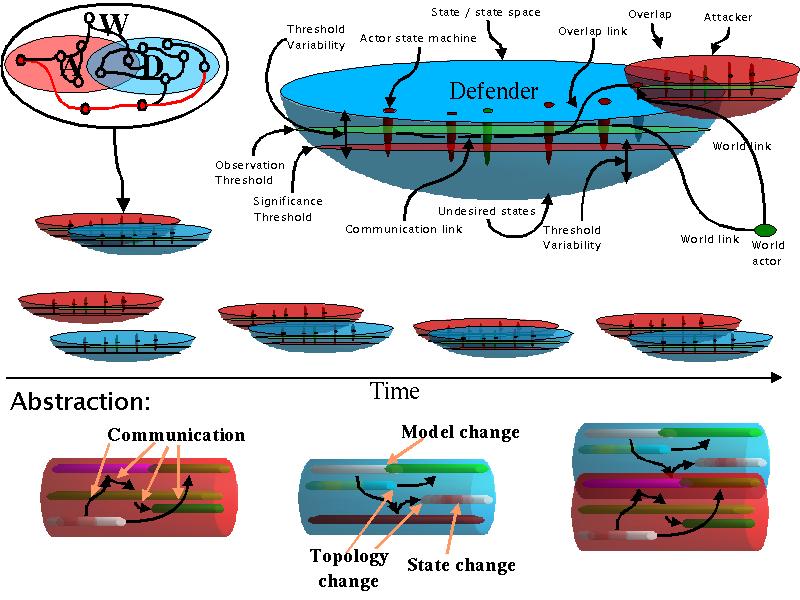

We start with the passive observation case for IP traffic and similar phenomena.

Observables can fail to reflect the total and actual content. In general this can be considered as a composition of missing existing data and making nonexistent data. In all cases of interest some data is missed, if only because of the inability to place unlimited granularity sensors at all points in the physical space of the defender. Thus all of the cognitive process can be seen in a similar light to black box testing. The lack of perfect observables leads to the unavoidable use of assumptions and expectations which in turn results in 'made' data.

The cognitive systems must make assumptions about the nature of the space between sensors in order to build a model. These assumptions, combined with the qualitative and quantitative limits of the cognitive system, produce the potential for errors in the form of a mismatch between the cognitive model and the real situation. These mismatches can be considered as a recursive combination of missed and falsely created sessions, associations, inconsistencies, and semantic content (a.k.a. understanding). The complexity limits of timely cognition also lead to limits on the accuracy of analysis.

A missed session is a series of interrelated observables that exists in the real system but is not properly modeled. A falsely created (made) session is a model of a series of interrelated observables when no such relations exist. A missed association is a relationship between observables in the real system that is not cognitively modeled. A falsely created (made) association is a model of a relationship between observables when no such relationship exists. A missed inconsistency is the failure to detect the relationship between a set of observables that, if properly analyzed, would indicate the presence of an error or imperfect deception. A falsely created (made) inconsistency is a model of the presence of an error or imperfect deception which does not actually exist in the system. A misunderstanding is a semantic interpretation that does not correspond to reality. Semantic interpretation introduces recursion because content and context are dictated by the possibly recursive languages and their syntax and semantics. These same sets of errors can occur at all recursive levels of syntax and semantics, as can the misunderstanding errors associated with failing to detect a recursion which exists or falsely modeling a recursion when there is none. All of these error types apply to both attacker and defender.
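The missed and made error types defined above can be illustrated, in a simplified way, as set differences between what exists in the real system and what the cognitive model contains. The following is a minimal sketch under that assumption; the function name `classify_errors` and the example session tuples are hypothetical and are not part of the model described in this paper.

```python
from typing import Hashable, Iterable


def classify_errors(real: Iterable[Hashable], modeled: Iterable[Hashable]) -> dict:
    """Compare real items with modeled items, assuming simple set semantics.

    'missed' items exist in the real system but not in the model;
    'made' items exist in the model but not in the real system.
    """
    real_set, model_set = set(real), set(modeled)
    return {
        "missed": real_set - model_set,
        "made": model_set - real_set,
        "correct": real_set & model_set,
    }


# Hypothetical sessions, each identified by a (source, destination, port) tuple.
real_sessions = {("10.0.0.1", "10.0.0.2", 80), ("10.0.0.3", "10.0.0.2", 22)}
modeled_sessions = {("10.0.0.1", "10.0.0.2", 80), ("10.0.0.9", "10.0.0.2", 443)}

result = classify_errors(real_sessions, modeled_sessions)
```

The same comparison could be applied to associations or inconsistencies by changing what each item represents. Misunderstandings are not captured by this sketch, since they require comparing semantic interpretations rather than the presence or absence of items.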

In the case of active experimentation, the errors associated with a passive process are augmented by errors associated with the attempt to search the state space of the system being modeled. These errors can be characterized as the making or missing of models or model changes, topologies and topological changes, communications, and states or state changes. It appears that, based on this overall model, these cases cover the set of all errors that can be made.

The cognitive processes associated with exploring a finite machine state space using a black box approach are problematic because of the inherent complexity of black box characterization. It is impossible in general to correctly explore the full state space of a sequential machine from a black box perspective because there may be states that are not reachable once certain input sequences have been encountered. Even if we restrict our interest to machines that can eventually revisit any previous state, the number of possible sequences for such systems is enormous. In the worst case, the length of the sequence required to characterize a finite state machine is exponential in the number of bits of state. Even for large classes of submachines such as those in typical computer languages and processor instruction sets, the size of this set is beyond all hope of exploring in detail. In the case of the systems under discussion, analog as well as finite state machines are involved, so appeals to continuity of analog functions are necessary to even approach exploration of these state spaces. Because of digital / analog interactions, aliasing and similar phenomena come into play as well.
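The exponential cost of black box exploration can be illustrated with a toy machine. The sketch below assumes a hypothetical `CombinationLock` that opens only after one specific n-bit input sequence and falls into a trap state on any wrong input; the class, the helper `trials_to_open`, and the choice of 8 bits are illustrative assumptions, not constructs from this paper.

```python
from itertools import product


class CombinationLock:
    """Hypothetical black box: opens only after one specific n-bit input sequence."""

    def __init__(self, secret):
        self._secret = tuple(secret)
        self._progress = 0   # number of correct leading bits seen so far
        self._dead = False   # trap state entered after any wrong input

    def reset(self):
        """Return to the initial state (assumes the observer may restart the box)."""
        self._progress = 0
        self._dead = False

    def step(self, bit):
        """Feed one input bit; return True only when the lock has just opened."""
        if self._dead:
            return False
        if self._progress < len(self._secret) and bit == self._secret[self._progress]:
            self._progress += 1
        else:
            self._dead = True
        return (not self._dead) and self._progress == len(self._secret)


def trials_to_open(lock, n_bits):
    """Try every n-bit sequence in order; return how many sequences were needed."""
    for count, seq in enumerate(product((0, 1), repeat=n_bits), start=1):
        lock.reset()
        opened = False
        for bit in seq:
            opened = lock.step(bit)
        if opened:
            return count
    return None


# With the secret placed last in enumeration order, all 2**8 sequences are tried.
worst_case = trials_to_open(CombinationLock([1] * 8), 8)
```

Without the `reset` assumption the situation is worse: the first wrong input leaves the machine in the trap state, and the open state can never be observed, which corresponds to the unreachable states mentioned above.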

Similarly, the enormous number of possible states in most systems limits the ability to assure that only desired states occur. The design of most current computers is such that they are general purpose in function and transitive in their information flow. This guarantees that they are capable of entering many undesirable states and that it is impossible to accurately differentiate all desired from undesired states definitively and in any available amount of time.
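The effect of transitive information flow can be sketched as the closure of a relation: if information can flow from one component to a second, and from the second to a third, it can flow from the first to the third. The example below is a minimal illustration under that reading; the function `transitive_flows` and the component names are hypothetical.

```python
def transitive_flows(direct):
    """Return all (source, sink) pairs reachable through chains of direct flows."""
    closure = set(direct)
    changed = True
    while changed:
        changed = False
        # Join every pair of known flows that share a middle component.
        for a, b in list(closure):
            for c, d in list(closure):
                if b == c and (a, d) not in closure:
                    closure.add((a, d))
                    changed = True
    return closure


# Hypothetical direct flows between components of a general purpose system.
direct = {
    ("network", "browser"),
    ("browser", "filesystem"),
    ("filesystem", "backup"),
}
flows = transitive_flows(direct)
```

Here three direct flows yield six possible flows in total, including one from `network` to `backup` that no single component was designed to permit. In larger systems the closure tends to grow much faster than the set of direct flows.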

The cognitive systems of attacker and defender are limited to non-definitive methods that use limited observables, modeled characteristics, and cognitive processing power to model opponent systems. The challenges in the conflict between attacker and defender are then: (1) for the attacker to select characteristics that provide the desired information and perform a series of observations and experiments that yield the desired states and observables in the defender and (2) for the defender to remain only in desired states and produce only desired interactions. In general, the defender is not limited to purely defensive methods in retaining its desired states. For example, a defender might maintain desired states by actively corrupting the attacker's cognitive model or state. Similarly, the attacker may have to defend itself in order to be effective against such a defender. Indeed, the situation is, at least potentially, symmetrical.

It is entirely possible that the objectives of the attacker and the objectives of the defender are not at odds with each other. In such a case, both the attacker and the defender may be able to operate freely without the need to consider interactions. In other cases, cognitive conflict will be carried out.

We have defined and described a model of errors in perception and conditions under which they occur. These error types appear to be unavoidable in any realistic situation in which organizations of humans and computers use cognitive means to analyze computer-related information. This model is a beginning, but surely not the end of this issue.

In the context of cognitive conflict between organizations of humans and computers, this model appears to provide a means by which we may analyze the limits of deception and counterdeception and devise systems and methods with optimal deception and counterdeception characteristics. But we have not yet developed the mathematical results of this theory to the point where we can make practical use of it for the formation of equations or determination of the limits of specific systems or circumstances. Nevertheless, it is a beginning with potential toward this end.

In [3] some examples were given of specific deception mechanisms that induce specific cognitive errors. In the future, it is anticipated that a far richer set of mechanisms will be available with specific association to cognitive errors they induce, times associated with those errors, and reliability figures related to observation and computation characteristics of the cognitive systems attempting to induce and counter the induction of those errors.